Recently, I experimented with DuckLake, a new data lakehouse format developed by the creators of DuckDB. I also tested its integration with dbt to evaluate the pros and cons of modeling data directly in the lake, instead of relying on a separate data warehouse.

Similar to existing open data lakehouse formats like Iceberg and Delta Lake, DuckLake takes advantage of cost-effective object storage services such as S3 to store Parquet files. However, DuckLake recognizes the limitations of object storage for metadata operations, and thus uses a relational database like PostgreSQL for managing its catalog.

Pros, cons and dealbreakers

DuckLake is simple yet powerful. It supports multiple schemas, file-based encryption, partitioning, pruning of old files, and descriptions for tables and columns.

Every change happens through a commit called “snapshot”: this makes it possible to time travel and see the full history of the lake. However, this also means that every file in the lake has to be managed according to the catalog, which requires compatible clients.

So far, only DuckDB with the ducklake extension can interpret the SQL catalog to manage files in the object storage bucket and edit the SQL catalog itself.

Only a few DuckDB integrations support the ducklake extension for now:

Upcoming integrations are:

Currently, it’s not practical to ingest data into the lake through tools like dlt, Airbyte, or Fivetran. Similarly, querying tables for analytical use cases with tools like Cube or Superset is not yet supported.

Due to its simplicity, DuckLake has almost no permission system. This means that with direct access to a DuckLake, users can query all tables and columns without restrictions, and developers can accidentally overwrite production data. The only permissions that can be configured within DuckLake are read-only or read-write access for both:

- database users accessing the SQL catalog

- object storage keys accessing the bucket.

First iteration: DuckDB catalog, local storage

Let’s dive into the technical implementation of a dbt-managed DuckLake, which I divided into two sections: the first focuses on experimenting with local files, while the second relies on managed services, to simulate a production-grade deployment.

Throughout this guide, I’ll use the following naming conventions:

- DuckLake: a data lake composed of a SQL catalog database and an object storage bucket

- ducklake: name of the DuckDB extension

- datalake: folder and name of the dbt project, alias given to the attached DuckLake

Install dependencies

I’m using uv instead of running pip and dbt directly because it’s much easier to manage dependencies and load variables from an environment file.

Let’s install uv and DuckDB in our systems.

For Arch Linux, yay -S uv duckdb-bin is enough.

Set up dbt

Let’s create a folder for our temporary data lake location and initialize a new dbt project.

mkdir -p /tmp/datalake_files

uv init --bare datalake

cd datalake

uv venv

uv add dbt-duckdb

source .venv/bin/activate

dbt initWhen prompted, choose a name for your project, like “datalake” and select 1 to create a dbt profile for the DuckDB adapter. Since dbt always initializes a new project in a child directory, let’s move regular and hidden files to the parent folder. Let’s also move the “profiles.yml” file created under $HOME to the repo:

mv datalake/* .

mv datalake/.* .

mv $HOME/.dbt/profiles.yml .

rm -r datalakeLet’s initialize a new git repo and add an ignore rule for credentials and user UUID.

echo ".env" >> .gitignore

echo ".user.yml" >> .gitignore

git init .

git add -A

git commit -m "Initialized dbt project"In the future, you can fully re-initialize your dbt environment in CIs or other computers by simply running:

uv venv

uv pip install -r pyproject.toml

source .venv/bin/activateLet’s edit the profiles file to configure the development target:

datalake:

target: dev

outputs:

dev:

type: duckdb

extensions:

- ducklake

attach:

- path: "ducklake:/tmp/datalake_files/catalog.duckdb"

alias: datalake

options:

data_path: /tmp/datalake_files/storage

database: datalakeTransform data

Now it should be possible to execute dbt run.

The local catalog will be be created as “/tmp/datalake_files/catalog.duckdb” and parquet files will be located under “/tmp/datalake_files/storage”.

You can see this with the tree /tmp/datalake_files command:

/tmp/datalake_files

├── catalog.duckdb

└── storage

└── ducklake-01975031-1ac0-76c1-91e5-d181901d4d9f.parquet

2 directories, 2 filesViews on the lake

dbt show

You can use dbt to preview tables and views:

dbt show --select my_first_dbt_modelDuckDB UI

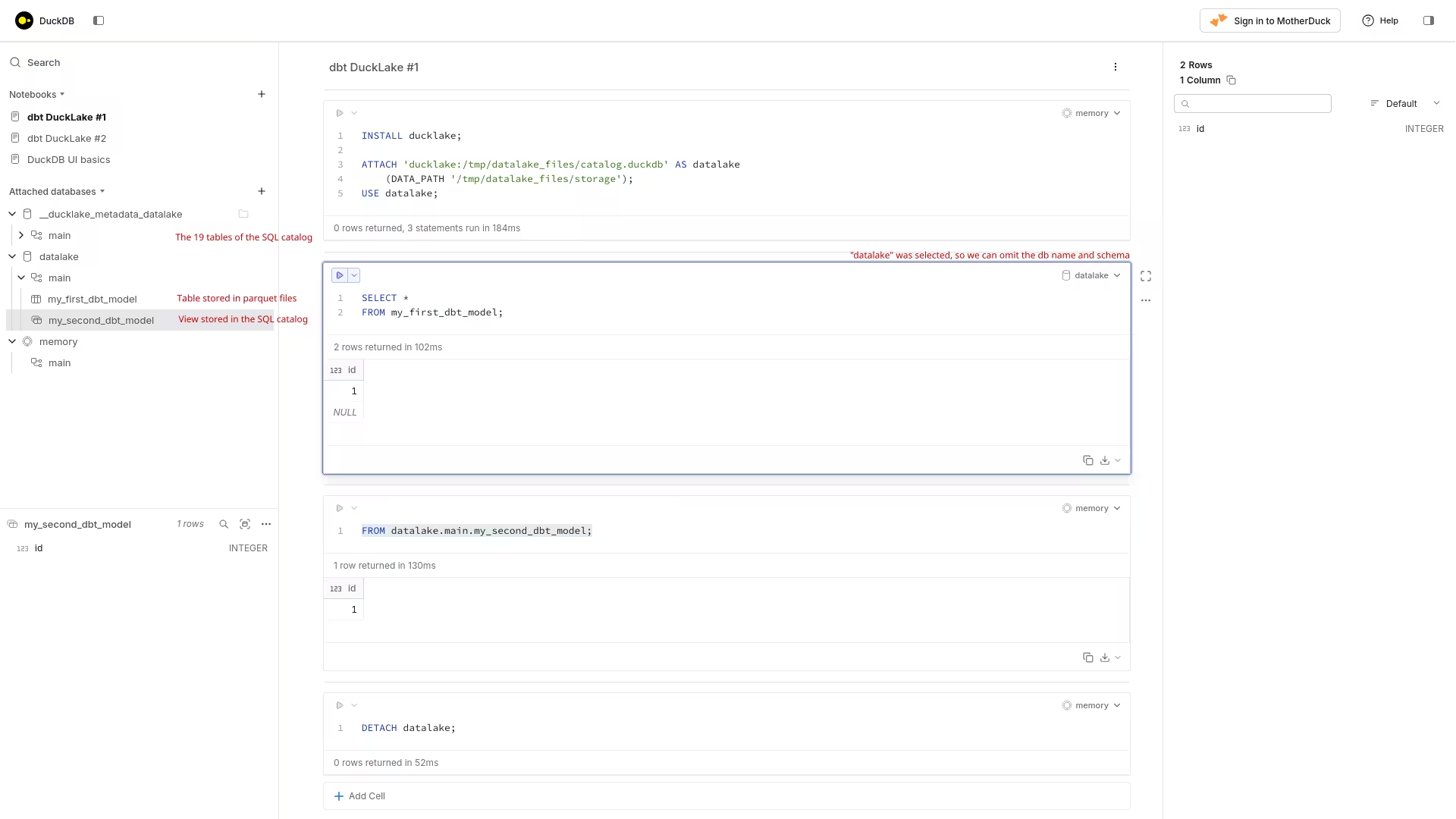

You can start the web UI of DuckDB by running duckdb --ui

From the UI, create a new notebook, then load the DuckLake extension, attach the catalog database and specify the location of files. Pay attention to the following:

- for local files, specify a

DATA_PATHthat is absolute, or one that is relative to the folder where you launched theduckdbcommand - use the same DuckLake alias that you specified in your dbt config, otherwise you won’t be able to query your views, as they reference a different alias

INSTALL ducklake;

ATTACH 'ducklake:/tmp/datalake_files/catalog.duckdb' AS datalake

(DATA_PATH '/tmp/datalake_files/storage/');

USE datalake;

FROM datalake.main.my_second_dbt_model;

USE memory;

DETACH datalake;

Make sure to detach your DuckLake or stop your DuckDB client before launching dbt again, to avoid lock errors:

Runtime Error

IO Error: Failed to attach DuckLake MetaData "__ducklake_metadata_dev_ducklake" at path + "data/catalog.duckdb"Could not set lock on file "/tmp/datalake_files/catalog.duckdb": Conflicting lock is held in /usr/bin/duckdb (PID 38407) by user jack. See also https://duckdb.org/docs/stable/connect/concurrencySecond iteration: PostgreSQL catalog, S3 storage

In this second iteration, we’ll use a third-party relational database and object storage for our data lake.

We are going to implement the medallion architecture using dbt to show how data can be curated and modeled for consumption.

Let’s remember that the primary goal of dbt is not to ingest data from different source into the lake, but rather to transform tabular data that is already in there.

This means that we’ll first need to manually load data in the lake using DuckDB.

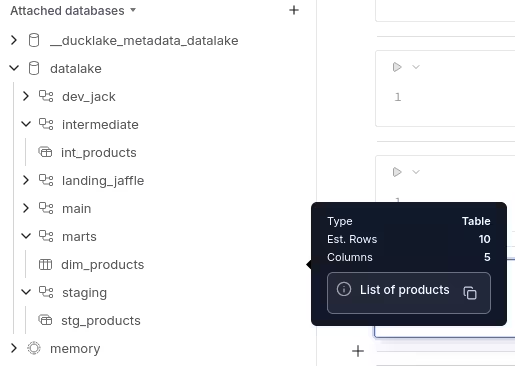

We are going to use these layers for the lake:

- landing_SOURCE: schemas for ingestion from external sources

- staging: dbt-managed, production schema for data cleaning

- intermediate: dbt-managed, production schema for data modeling

- marts: dbt-managed, production schema for BI/ML/LLM consumption

- dev_USER: dbt-managed, personal development schemas for all models

Set up the storage bucket

Configure a new object storage bucket for the data lake and associate to it a key with read and write permissions.

S3compare shows a list of object storage providers and their prices. Many providers offer a free tier and some of them don’t even charge for egress, making it appealing for data that is queried often. If you prefer to experiment locally, garage is fantastic.

Set up the catalog database

Configure a new PostgreSQL or MariaDB database, and associate to it a user with read and write permissions.

Both Neon and Supabase offer a free tier for their managed PostgreSQL databases.

Set up environment variables

Even if dbt or duckdb cannot natively read variables from environment files, we can store our secrets in a local “.env” file to make them reusable by both services:

# example for local postgresql db

PGHOST=127.0.0.1

PGPORT=5432

PGDATABASE=catalog

PGUSER=dbt

PGPASSWORD=XXXXXX

# example for local garage bucket

DUCKDB_S3_USE_SSL=FALSE

DUCKDB_S3_ENDPOINT=127.0.0.1:3900

AWS_REGION=garage

AWS_ACCESS_KEY_ID=IDIDID

AWS_SECRET_ACCESS_KEY=RWRWRW

URL_STYLE=path

BUCKET_NAME=datalake

# used by AWS CLI

AWS_ENDPOINT_URL='http://localhost:3900'Then, re-alias the dbt and duckdb commands so that these variables are exported before each run:

alias "dbt=uv run --env-file $PWD/.env dbt"

alias "duckdb=uv run --env-file $PWD/.env duckdb --ui"Ideally, these aliases should be loaded in your shell’s configuration file, like ”~/.zshrc”.

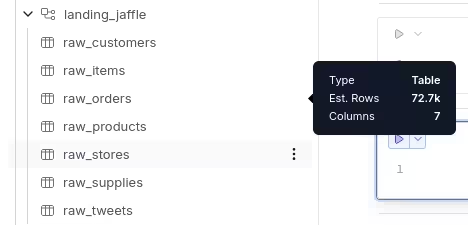

Load data

We can use a simple command to generate mock csv data for a sandwich chain:

uvx jafgen

echo "jaffle-data/" >> .gitignore

git add .gitignoreThis will generate, under the “jaffle-data” folder:

Permissions Size User Date Modified Git Name

.rw-r--r-- 49k jack 2025-06-15 19:33 -I raw_customers.csv

.rw-r--r-- 8.9M jack 2025-06-15 19:33 -I raw_items.csv

.rw-r--r-- 10M jack 2025-06-15 19:33 -I raw_orders.csv

.rw-r--r-- 923 jack 2025-06-15 19:33 -I raw_products.csv

.rw-r--r-- 477 jack 2025-06-15 19:33 -I raw_stores.csv

.rw-r--r-- 2.7k jack 2025-06-15 19:33 -I raw_supplies.csv

.rw-r--r-- 4.0M jack 2025-06-15 19:33 -I raw_tweets.csvTo load this data in our lake, launch duckdb or duckdb --ui and run:

-- make sure that env is set

SELECT getenv('PGHOST');

-- idempotent cleanup

DETACH DATABASE IF EXISTS datalake;

DROP SECRET IF EXISTS __default_postgres;

DROP SECRET IF EXISTS __default_s3;

-- this env variable is not loaded automatically by duckdb

CREATE SECRET (

TYPE s3,

URL_STYLE getenv('URL_STYLE')

);

-- we must specify manually the database alias and the bucket name, as env vars are not supported in parameters

-- the "ENCRYPTED" option will set up the lake storage to be encrypted

INSTALL ducklake;

ATTACH 'ducklake:postgres:' AS datalake

(DATA_PATH 's3://datalake' ENCRYPTED);

USE datalake;

-- load files as tables in the "landing_jaffle" schema

CREATE SCHEMA IF NOT EXISTS datalake.landing_jaffle;

CREATE OR REPLACE TABLE datalake.landing_jaffle.raw_customers AS FROM read_csv('jaffle-data/raw_customers.csv');

CREATE OR REPLACE TABLE datalake.landing_jaffle.raw_items AS FROM read_csv('jaffle-data/raw_items.csv');

CREATE OR REPLACE TABLE datalake.landing_jaffle.raw_orders AS FROM read_csv('jaffle-data/raw_orders.csv');

CREATE OR REPLACE TABLE datalake.landing_jaffle.raw_products AS FROM read_csv('jaffle-data/raw_products.csv');

CREATE OR REPLACE TABLE datalake.landing_jaffle.raw_stores AS FROM read_csv('jaffle-data/raw_stores.csv');

CREATE OR REPLACE TABLE datalake.landing_jaffle.raw_supplies AS FROM read_csv('jaffle-data/raw_supplies.csv');

CREATE OR REPLACE TABLE datalake.landing_jaffle.raw_tweets AS FROM read_csv('jaffle-data/raw_tweets.csv');

-- show loaded tables

FROM (SHOW ALL TABLES)

WHERE database = 'datalake';

If you have the AWS CLI installed, you can check that these files exist by running:

uv run --env-file .env aws s3 ls "s3://datalake/"Adjust dbt

Sources

Let’s add the “landing_jaffle” schema and tables as dbt sources, so they can be referenced through the source() macro:

version: 2

sources:

- name: jaffle_store

loader: duckdb_manual

database: datalake

schema: landing_jaffle

tables:

- name: raw_customers

- name: raw_items

- name: raw_orders

- name: raw_products

- name: raw_stores

- name: raw_supplies

- name: raw_tweetsProfiles

Edit the profiles file to use the env variables we specified before.

For demonstration only, I’ll store in the local profiles file the targets for both development and production, and I’ll use the merge key << to load common configurations:

datalake:

target: dev

outputs:

_common: &common

type: duckdb

extensions:

- ducklake

- httpfs

- name: cache_httpfs

repo: community

secrets:

- type: postgres

name: catalog_credentials

host: "{{ env_var('PGHOST') }}"

port: "{{ env_var('PGPORT') }}"

user: "{{ env_var('PGUSER') }}"

password: "{{ env_var('PGPASSWORD') }}"

database: "{{ env_var('PGDATABASE') }}"

- type: s3

endpoint: "{{ env_var('DUCKDB_S3_ENDPOINT') }}"

use_ssl: "{{ env_var('DUCKDB_S3_USE_SSL') }}"

url_style: "{{ env_var('URL_STYLE') }}"

region: "{{ env_var('AWS_REGION') }}"

key_id: "{{ env_var('AWS_ACCESS_KEY_ID') }}"

secret: "{{ env_var('AWS_SECRET_ACCESS_KEY') }}"

attach:

- path: "ducklake:postgres:"

alias: datalake

options:

meta_secret: catalog_credentials

data_path: "s3://{{ env_var('BUCKET_NAME') }}"

encrypted: true

database: datalake

outputs:

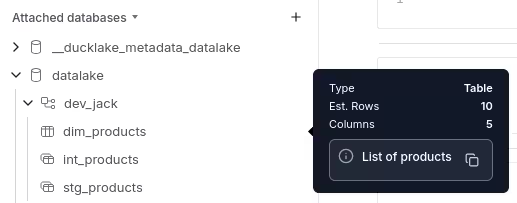

dev:

<<: *common

# every developer gets their own schema (e.g. "dev_jack")

schema: "dev_{{ env_var('USER', 'common') | lower }}"

prod:

<<: *common

# fallback schema for production models

schema: "main"Schemas

Edit the models section in “dbt_project.yml” to associate different schemas to models located in different subfolders:

# [...]

models:

# default settings

datalake:

+materialized: view

# write table and column comments

+persist_docs:

relation: true

columns: false

# folder-specific settings

staging:

+schema: staging

+docs:

node_color: brown

intermediate:

+schema: intermediate

+docs:

node_color: silver

marts:

+schema: marts

+materialized: "{{ 'table' if target.name == 'prod' else 'view' }}"

+docs:

node_color: gold

flags:

send_anonymous_usage_stats: falseCreate this macro to make dbt use the above schemas only when --target prod is specified, and use dev_USER otherwise.

{% macro generate_schema_name(custom_schema_name, node) -%}

{{ generate_schema_name_for_env(custom_schema_name, node) }}

{%- endmacro %}Models

Let’s create the folders we need and a couple of models to showcase the lineage functionality of dbt.

mkdir -p models/{staging,intermediate,marts}First, we should clean and rename the data in the “staging” layer:

with

source as (

select * from {{ source("jaffle_store", "raw_products") }}

where sku is not null

),

cleaned as (

select

-- id

sku as product_id,

-- string

name as product_name,

lower(type) as product_type,

description as product_description,

-- numbers

cast(price * 0.01 as decimal(18, 2)) as product_price,

from source

)

select * from cleanedIn the “intermediate” layer, we can apply joins and other transformations to enrich our data:

select

-- id

product_id,

-- string

product_name,

product_type

product_description

-- numbers

product_price

from {{ ref("stg_products") }}

-- add eventual joins hereThen, in the “marts” layer we present dimensions and facts to end users, so that they can be queried directly or joined to form a semantic layer:

select

-- grain

product_id,

-- dimensions

product_name,

product_type,

product_description,

product_price

-- measures

from {{ ref("int_products") }}Now, let’s create a file containing reusable definitions for our models and columns:

# dbt project definitions

## Homepage

{% docs __overview__ %}

# dbt + DuckLake

An example dbt project built on top of DuckLake.

Learn more about [dbt](https://github.com/Hiflylabs/awesome-dbt) and [DuckDB](https://github.com/davidgasquez/awesome-duckdb) from the community.

{% enddocs %}

## Models

### Staging

{% docs stg_products %}

Cleaning and renaming of product data.

{% enddocs %}

### Intermediate

### Marts

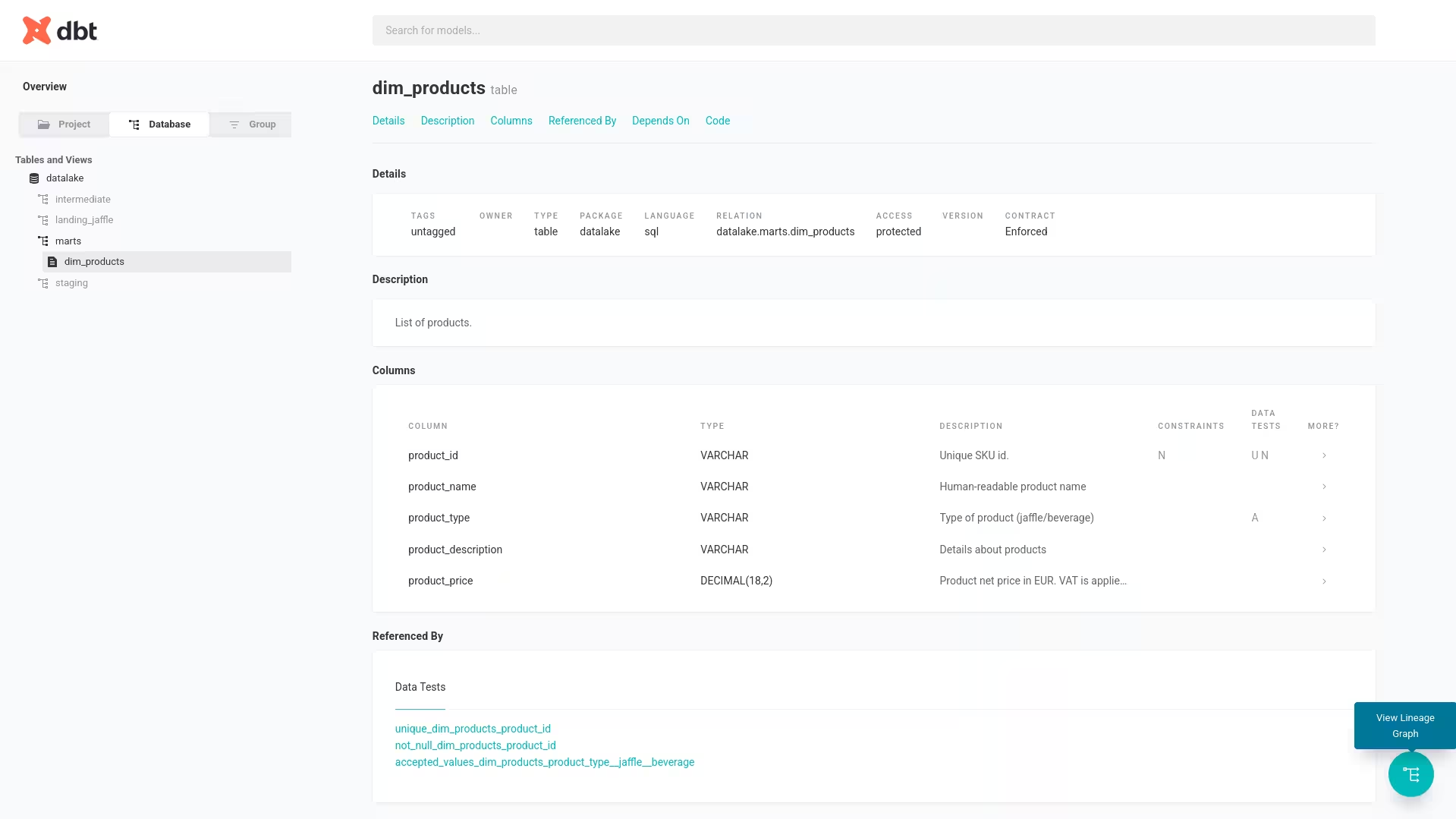

{% docs dim_products %}

List of products.

{% enddocs %}

## Columns

### Keys

{% docs product_id %}

Unique SKU ID

{% enddocs %}

### Dimensions

{% docs product_name %}

Human-readable product name

{% enddocs %}

{% docs product_type %}

Type of product (jaffle/beverage)

{% enddocs %}

{% docs product_description %}

Details about products

{% enddocs %}

{% docs product_price %}

Product net price in EUR.

VAT is applied at checkout based on sale location.

{% enddocs %}

### MeasuresFinally, let’s use these documentation blocks to describe our models:

version: 2

models:

- name: stg_products

description: '{{ doc("stg_products") }}'

columns:

- name: product_id

description: '{{ doc("product_id") }}'

data_tests:

- unique

- not_null

# [...]

- name: int_products

description: '{{ doc("dim_products") }}'

columns:

- name: product_id

description: '{{ doc("product_id") }}'

data_tests:

- unique

- not_null

# [...]

- name: dim_products

description: '{{ doc("dim_products") }}'

# this can be used to verify column types and not-null constraints

contract:

enforced: true

columns:

- name: product_id

description: '{{ doc("product_id") }}'

data_type: varchar

data_tests:

- unique

- not_null

constraints:

- type: not_null

warn_unsupported: false

config:

meta:

primary_key: true

- name: product_name

description: '{{ doc("product_name") }}'

data_type: string

- name: product_type

description: '{{ doc("product_type") }}'

data_type: string

data_tests:

- accepted_values:

values: [jaffle, beverage]

- name: product_description

description: '{{ doc("product_description") }}'

data_type: string

- name: product_price

description: '{{ doc("product_price") }}'

data_type: decimal(18,2)Transform data

Now we can transform the data in our development environment using dbt:

dbt run --select +dim_products --fail-fastThe output should be:

23:44:03 Running with dbt=1.9.6

23:44:04 Registered adapter: duckdb=1.9.4-dev21

23:44:05 Found 5 models, 6 data tests, 7 sources, 429 macros

23:44:05

23:44:05 Concurrency: 1 threads (target='dev')

23:44:05

23:44:05 1 of 3 START sql view model dev_jack.stg_products .............................. [RUN]

23:44:05 1 of 3 OK created sql view model dev_jack.stg_products ......................... [OK in 0.21s]

23:44:05 2 of 3 START sql view model dev_jack.int_products .............................. [RUN]

23:44:05 2 of 3 ERROR creating sql view model dev_jack.int_products ..................... [ERROR in 0.04s]

23:44:05 CANCEL query model.datalake.int_products ....................................... [CANCEL]

23:44:05

23:44:05 Runtime Error in model int_products (models/intermediate/int_products.sql)

Parser Error: syntax error at or near "product_price"

23:44:06

23:44:06 Finished running 3 view models in 0 hours 0 minutes and 1.00 seconds (1.00s).

23:44:06

23:44:06 Completed with 2 errors, 0 partial successes, and 0 warnings:

23:44:06

23:44:06 Runtime Error in model int_products (models/intermediate/int_products.sql)

Parser Error: syntax error at or near "product_price"

23:44:06

23:44:06 Done. PASS=1 WARN=0 ERROR=1 SKIP=1 TOTAL=3Ooops, it looks like we forgot some commas in the intermediate model.

After we fix the problematic code, dbt run completes successfully.

Then we should ensure that the model passes all the data tests we defined:

dbt test --select +dim_products --fail-fast

Now, let’s execute a production run by specifying the “prod” target:

dbt run --select +dim_products --target prodAfter the transformation completes, tables and views appear under the relevant data lake schemas:

Additional views on the lake

dbt docs

Since we properly documented our models, we can generate a website that lists all model properties and their relationship:

dbt docs generate --target prod

dbt docs serve

These pages are generated on the fly from three “artifact” files under “target/”, so it’s easy to host the documentation as a static website:

- index.html

- catalog.json

- metadata.json

Read-only credentials

Until now, we have used read-write permissions to modify both the catalog database and storage bucket. Users performing data analyses shouldn’t need write permissions, so we should generate a set of read-only credentials for them:

- new object storage keys which are granted read-only access to the storage bucket

- new database users which are granted read-only access to the catalog tables

Once connected with read-only credentials (e.g. from DuckDB), read commands still succeed, but write commands fail:

-- read succeeds

FROM datalake.landing_jaffle.raw_products;

-- write to catalog fails

COMMENT ON TABLE datalake.landing_jaffle.raw_products IS 'write test';

-- Invalid Input Error: Attempting to fetch from an unsuccessful query result

-- Error: TransactionContext Error: Failed to commit: Failed to commit DuckLake transaction: Failed to update tag information in DuckLake: Failed to execute query "UPDATE "public"."ducklake_tag" SET "end_snapshot" = "update_data_48cf3ee0-109d-4048-a3ec-7e4a7aab2d5d"."end_snapshot" FROM "update_data_48cf3ee0-109d-4048-a3ec-7e4a7aab2d5d" WHERE "ducklake_tag".ctid=__page_id_string::TID": ERROR: permission denied for table ducklake_tag

-- write to storage fails

COPY

(FROM datalake.landing_jaffle.raw_products)

TO 's3://datalake/new_file.parquet'

(FORMAT parquet);

-- HTTP Error: Unable to connect to URL http://127.0.0.1:3900/datalake/new_file.parquet?uploads=: Forbidden (HTTP code 403)Compatible apps

As I mentioned before, for now DuckDB CLI/UI and DIY scripts are the only way to access a DuckLake.

I’ll list here services and apps that feature native DuckLake integrations or that expose a DuckLake through protocols like Arrow Flight SQL (or Postgres wire, if you really hate arrows and prefer to transpose your ducks in a row).

If you are hacking on a DuckLake integration, drop me a message!

Wrap-up

In less than half an hour, we saw how to set up a “vendor-agnostic” data lake based on three services:

- object storage for tabular files

- relational database for metadata

- git server for transformation flows and documentation

However, it quickly emerged how the lack of third-party integrations and protocol compatibility effectively brings back the lock-in factor when it comes to managing or accessing the data, i.e. every operation happens through some flavor of DuckDB, which at least it’s open source.

Regarding direct ELT on the lake, the lack of granular write permissions remains a footgun that cannot be ignored. As always, remember to take backups!